Impact measurement tools: using Government and other nationally developed questions in your surveys

Why and how to use resources and evidence from the Office for National Statistics and other verified sources to improve your impact measurement and needs analysis, including a download of suggested indicators

Research and evidence have always been important to how I work, both for my own interest and to improve the activities of the people and organisations I work with. For example, after undertaking an impact assessment for a project working around loneliness I wanted to dig deeper into the research about what affected loneliness and isolation and what was most likely to work in service design. This deeper dive into the topics I work around builds a knowledge base that adds extra value to my work with clients.

This blog gets into the weeds a bit about some of the practical issues you might encounter when using these types of indicators - you may not be interested in all of it, but different sections are titled so that you can pick out what you want. A pdf of suggested indicators is available in the link below. One of the main factors is making the indicators fit with the outcomes of your programme and with what people accessing your service want (so consult them on what's best!) - if they are meaningfully linked in with assessment and review for people, they are most likely to be implemented usefully and correctly.

I was interviewed earlier in the year by the Campaign to End Loneliness for a Department for Culture, Media & Sport report on the Evaluation of interventions to tackle loneliness that discusses some of the issues in this blog, but most of all recommends that there is more support provided to the sector to understand, select and implement various measures.

I don't want to read the blog, can I just download the indicators?

The value of adding standard survey questions

When developing impact frameworks and tools I generally encourage organisations to use indicators that are already developed by other organisations. The main advantages to this are:

- They have already been robustly tested with a wide audience to ensure that the questions are understandable, are valid - i.e. they measure what they are supposed to, with minimal overlap between different questions in the same instrument - and are reliable - i.e. they give the same answer in the same situation over time. (As an example, lack of reliability is a common criticism of the Myers-Briggs Type Indicator, which people may be familiar with. Whilst it can be really useful in some situations to help people understand relationships and dynamics in teams - I have run a workshop on it deconstructed - categorising people into 16 groups may not be so useful. People may be familiar with taking it on different occasions and getting different results, although research shows it to be more reliable than people often believe.) Note that not all indicators will have been developed to measure before and after change as opposed to taking a snapshot at a particular time, but they are commonly used in this way by a variety of organisations.

- There are robust benchmarks to compare against - for example the Community Life Survey measures the Office for National Statistics four wellbeing questions and the single loneliness question. There is also a Government dashboard of various questions and current measurements. With any of these, data changes from year to year, so you should always check for the most up-to-date data. You can use this data right at the beginning of any project to demonstrate that your clients have particular needs, with scores below national averages.

There is currently a review of national wellbeing indicators being undertaken by the Government, which may change and/or add indicators.

Which questions do I recommend to clients most frequently?

I often mix and match questions from different places. Many instruments are designed to be used as a whole rather than in this way (although not the questions that I'm recommending below) - but again, for the purposes that most charities need them this is not likely to be a problem. I most commonly recommend the ONS four wellbeing questions and the single loneliness question from the Government's loneliness strategy. I also recommend questions from the Community Life Survey. These choices are partly because of the benchmarks, as above, but also because they are questions that are used with the general public, whereas some questionnaires can feel as though they are pathologising people and are only used where a deficit is perceived.

Like many charities, I used to recommend the short (7-item) or longer (14-item) Warwick-Edinburgh Mental Wellbeing Scale, but found some indicators problematic. Asking older people who are aware they might be in the last years of their life whether they feel optimistic about the future seems insensitive. People can struggle to understand what feeling useful means (and in any case it seems to espouse a particular type of Protestant work ethic - why should someone feel as though they need to be useful?! Perhaps not a priority when you're coping with poor health or poverty). Feeling close to other people could feel wrong for women experiencing domestic abuse or coercive control, when actually not feeling close to someone abusive is the aim. There are also issues with licensing that many organisations are unaware of, as is the case with other academic or commercial surveys.

Common issues and problems you might face and what you can do about them

Choosing questions with different measurement scales

Strictly speaking, the questions and answers should have the same phrasing as the version that you are copying from. Sometimes, though, you might decide that questions from different places are perfect for your particular project, but the choices for answers are phrased differently: one asks people to rate their answers from 0-10, another asks them to agree or disagree with the statement, and a further one has five answers from never to always. This can be confusing for people (although on the other hand it does make them read the questions properly rather than just answering "slightly disagree" to everything).

As you are probably not conducting academic research, making slight changes for consistency is one solution - it does mean that it may be more difficult to benchmark your answers (though not impossible to do an approximation to this with a note about your methodology) but it does mean that you are asking the questions that you think matter. An example of doing this is

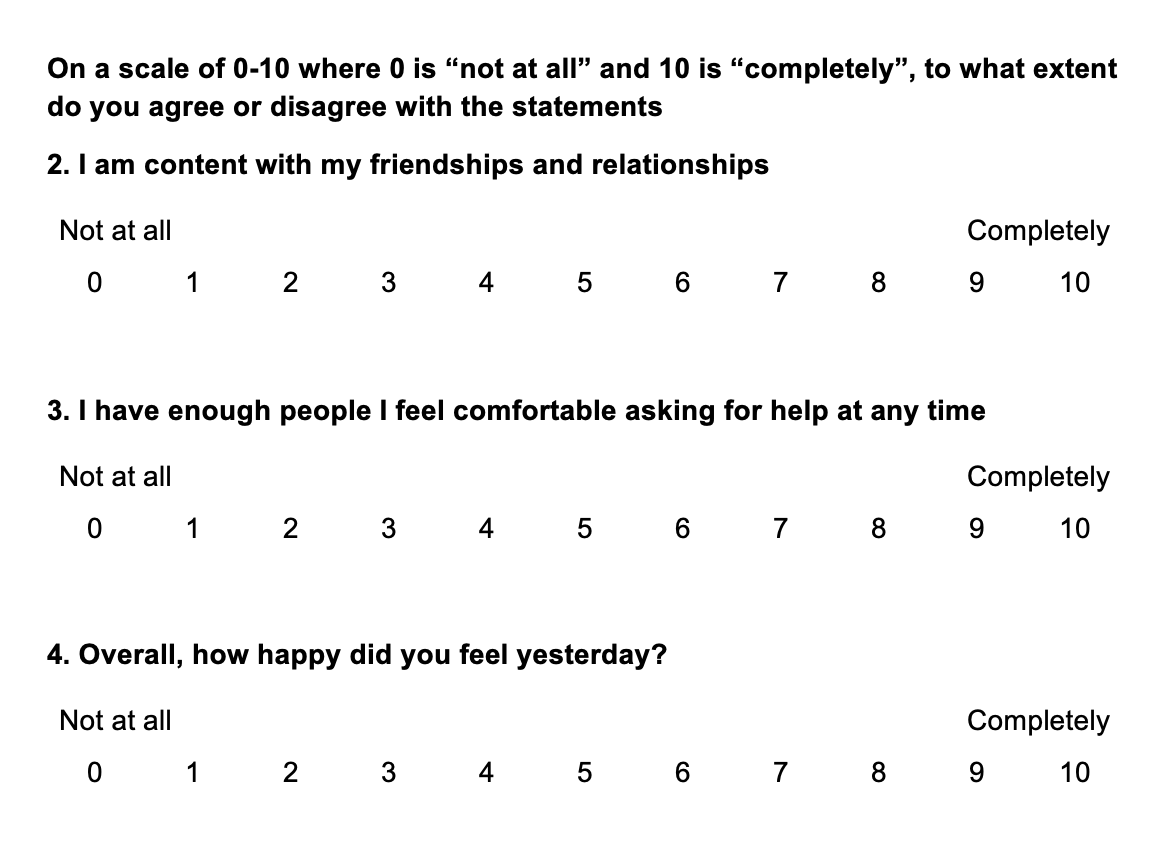

VASL's Community Champions project - they chose five key questions. For three we kept the original answers - we felt that the single loneliness question was important that it stayed as it was, as the project is focused around loneliness. Another of the indicators we felt didn't fit well with the 0-10 format. We adapted two other questions though so that the scale (0-10) was the same as for the ONS wellbeing happiness question. For the "I am content with my friendships and relationships" question from the Harvard Flourishing scale this was a minimal change from renaming the 0-10 "strongly disagree" to "strongly agree" to "not at all" to "completely". For the "I have enough people I feel comfortable asking for help at any time" this was a larger change as the original scale was purely text based and has five points from strongly disagree to strongly agree. These questions are below.
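If you do adapt an answer scale and still want a rough comparison with results collected on a different scale, one simple option is a straight-line conversion between the two. The sketch below is only an illustration of that idea: the function name, the 1 to 5 coding of the agreement scale and the example answers are all assumptions, and a converted score is an approximation rather than a validated equivalent, so it is worth noting the method in your report.

```python
def rescale(value, old_min, old_max, new_min=0, new_max=10):
    """Linearly map a score from one answer scale onto another."""
    if not old_min <= value <= old_max:
        raise ValueError(f"{value} is outside the {old_min}-{old_max} scale")
    fraction = (value - old_min) / (old_max - old_min)
    return new_min + fraction * (new_max - new_min)


# Assumed coding: 1 = strongly disagree ... 5 = strongly agree (made-up answers).
five_point_answers = [1, 3, 4, 5]
on_zero_to_ten = [rescale(answer, 1, 5) for answer in five_point_answers]
print(on_zero_to_ten)  # [0.0, 5.0, 7.5, 10.0]
```

A straight-line conversion treats the gaps between answer options as equal, which may not match how people actually read the labels, so use the result for indicative comparison only.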

Not being able to get a consistent before and after

The idea behind these scales is taking two or more measures at different times to measure the change between them. If you're undertaking ongoing keywork with someone it can be relatively easy to get before, during and after measurements of distance travelled. This can be done as part of a broader review process that helps people reflect on what has changed for them - in this way it can be meaningful for the person surveyed and not just something that they do predominantly for the benefit of the organisation. Note, though, that people often find endings difficult and may not turn up for a final session, so it's good to do the scale before this.

However, sometimes getting two or more measures is difficult. An example from my experience has been measuring changes amongst volunteers, some of whom complete surveys post-training and some of whom complete annual surveys, but who don't necessarily complete both. It can be fiddly and time consuming to link up respondents unless you have a database set up for this purpose. Another example is Growing Together, a community centre in Northampton where people may drop in repeatedly, but on a casual basis, so being consistent about surveying people at different times can be difficult.
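If you don't have a database for this, linking two sets of responses can be sketched in a few lines, provided each person enters the same identifier on both surveys. Everything in this example is hypothetical: the volunteer codes, the scores and the function names are made up for illustration, and your own survey export will look different.

```python
def normalise(identifier):
    """Make identifiers comparable: trim spaces and ignore capitalisation."""
    return identifier.strip().lower()


def link_responses(before, after):
    """Pair up scores for people who appear in both surveys.

    `before` and `after` map an identifier to a score. Returns the change
    for each matched person, plus the identifiers found in only one survey.
    """
    before = {normalise(key): score for key, score in before.items()}
    after = {normalise(key): score for key, score in after.items()}
    matched = {key: after[key] - before[key] for key in before if key in after}
    unmatched = sorted(set(before) ^ set(after))
    return matched, unmatched


# Made-up example data with a hypothetical volunteer code as the identifier.
post_training = {"VOL-001": 5, "vol-002 ": 6, "VOL-003": 4}
annual_survey = {"vol-001": 7, "VOL-002": 6, "VOL-004": 8}
changes, unmatched = link_responses(post_training, annual_survey)
print(changes)    # {'vol-001': 2, 'vol-002': 0}
print(unmatched)  # ['vol-003', 'vol-004']
```

Keeping the unmatched list is deliberate: it shows how many people completed only one survey, which is useful context when reporting the change for those who completed both.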

It may be possible to be more organised about getting people to fill things in - for example getting volunteers to complete a feedback survey before they can start volunteering - this can be combined with a general feedback questionnaire about their experience of recruitment and induction and you can make sure that not all questions are compulsory so that whilst you're getting them to look at it, you're not compelling them to answer every question, which doesn't quite fit with the spirit of volunteering!

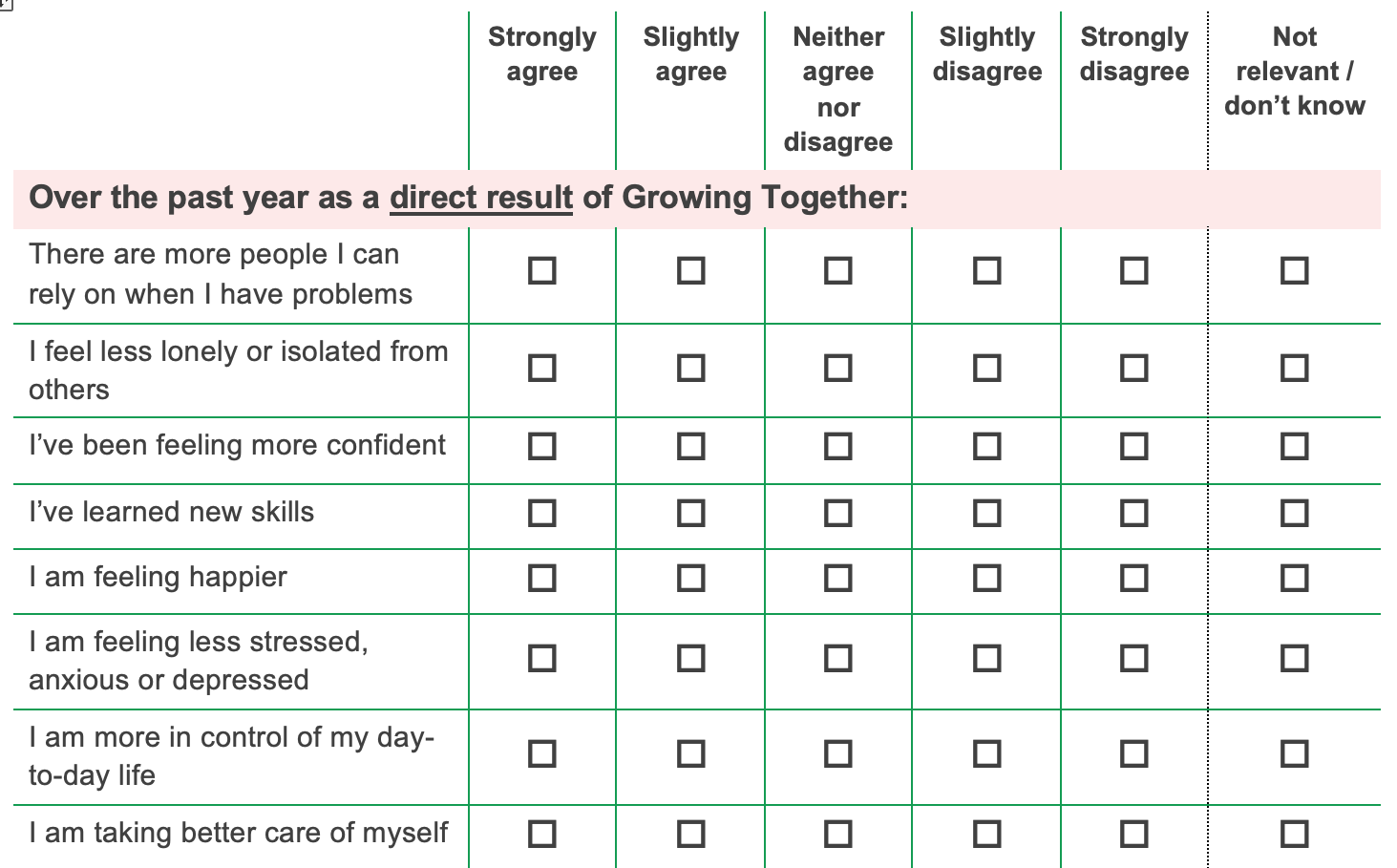

Another alternative is to adapt questions so that you are only asking them at the end of a time period and not doing a before measure - you can use this at an event or choose a week/two weeks/a month in which you'll focus on asking everyone accessing the service the same questions. Here is an example of a form designed with Growing Together,

Using questions repeatedly with the same people and not seeing movement after the initial period

Often the aim of projects is to work with people for a specific length of time and then for them to move on to other things. However, there are some situations in which people are always going to need involvement, such as frail older people, or people with long-term conditions, disabilities or situations. In my experience, distance travelled measures can show a lot of progress initially as the person comes in needing more intense support, but then as things improve and the relationship with the service becomes less intense, or more about maintaining changes than improving things, measures can plateau. There are a few possibilities here. You could have a different measure for the first year (or whatever intervention period you are using) that uses distance travelled and then swap to something else after that. You could continue using distance travelled for everyone, but analyse it separately for people who have been involved for different lengths of time. Or you can switch to a single measure rather than distance travelled, as above.
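Analysing distance travelled separately by length of involvement could look something like the sketch below. The twelve-month cut-off, the band names and the records are all invented for illustration, so choose bands that match your own intervention period.

```python
from collections import defaultdict
from statistics import mean


def band(months_involved):
    """Hypothetical bands; pick cut-offs that suit your own service."""
    if months_involved < 12:
        return "under 1 year"
    return "1 year or more"


def average_change_by_band(records):
    """records: list of (months_involved, first_score, latest_score)."""
    grouped = defaultdict(list)
    for months, first, latest in records:
        grouped[band(months)].append(latest - first)
    return {name: mean(changes) for name, changes in grouped.items()}


# Made-up scores on a 0-10 scale.
records = [(3, 4, 7), (8, 5, 7), (20, 7, 7), (36, 6, 7)]
print(average_change_by_band(records))
# {'under 1 year': 2.5, '1 year or more': 0.5}
```

Reporting the two groups side by side makes a plateau among longer-term participants visible, rather than letting it drag down one overall average.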

Stopping things from getting worse but not making them better - not knowing the counterfactual - what would have happened otherwise

This is a similar problem to the one above. What would have happened anyway without the intervention is often called the "counterfactual" and links in with attribution - what your service can claim in relation to the changes and what's caused by something else, for example a health condition that would slowly improve anyway or a change in the jobs market making it easier for people to find work.

Sometimes all charities can do is to try to prevent or delay things getting worse, for example in progressive health conditions, but using before and after measures is likely to show "backwards" movement. In randomised controlled trials, often called the "gold standard of research" (although there are many reasons why they are not always useful!), the study population is randomised into two (or more) groups, one of which does not receive any intervention at all, to see what happens to them in comparison to people who have received the intervention(s). However, this is not really ethical in day-to-day charity services! One solution is not to use distance travelled, but instead to ask questions at specific times, as for the Growing Together example. If you do want to use distance travelled, then think carefully about the questions that are asked and where positive change is more likely to be possible, for example people feeling less stressed about their situations, that they have more people to talk to, or that they have greater connections with others in similar situations.

Testing and validation of questions has often only been done in English

One downside of standard questions is that they have often only been tested in English rather than other languages. This is a problem that we ran into when running a Money Advice Service What Works? project - we recruited people who were apparently too financially excluded because of language to be able to do robust measurements, because the surveys were only validated in English. The Money Advice Service weren't able to help us through this and didn't want to change the methodology, so the opportunity to find out more from a population that is rarely heard from (including in this particular programme) was lost. It was also an issue that the National Lottery Community Fund ran into with its Ageing Better programme, particularly in Leicester where we put together a project that had a huge focus on areas of the city with a large South Asian population. However, in both of these instances there was a need for more academic rigour than is needed for most voluntary sector impact measurements, so translating or interpreting questions into other languages should be fine, although you might want to pilot them first with speakers of those languages to check. In any case, piloting any questionnaire is generally good practice.

Scales not being sensitive enough to measure change

This is a problem that we found with the UCLA loneliness scale, also recommended in the Government loneliness strategy. With only three points to the questions (hardly ever or never; some of the time; often), it wasn't picking up enough change, which is why I tend not to recommend it. If you did like the statements in this (How often do you feel that you lack companionship? How often do you feel left out? How often do you feel isolated from others?), as above you could change the answers - making them match the five-point answers in the single loneliness question recommended in the same strategy if you are using that, or using the 0-10 rating of the ONS four wellbeing questions.

Knowing what's changed but not why, and what can be attributed to your service?

Scales and measures have their place and they will tell you that a change has been made, but not necessarily why - for that you need to ask more open-ended questions about what has helped and hindered. Good questions are out of the scope of this blog, but possibly a subject for a further one if it would be useful.

Attribution is another issue, as above: what might have changed anyway and is nothing to do with you? You can quantify this by asking, for example, what percentage of the change people would attribute to the organisation, as well as asking questions about what else has made a difference.
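As a rough illustration of how a self-reported attribution percentage might be applied, the sketch below scales each person's measured change by the share they credit to the organisation. The function name and figures are made up, and this is only one simple way of doing it: people's estimates are subjective, so treat the result as indicative rather than precise.

```python
def attributed_change(before, after, attribution_percent):
    """Share of an observed change that the respondent credits to the service."""
    if not 0 <= attribution_percent <= 100:
        raise ValueError("attribution_percent must be between 0 and 100")
    return (after - before) * attribution_percent / 100


# Made-up data: (before score, after score, % attributed to the organisation)
responses = [(4, 8, 50), (5, 7, 100), (6, 6, 0)]
per_person = [attributed_change(b, a, p) for b, a, p in responses]
print(per_person)  # [2.0, 2.0, 0.0]
```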

How to implement questionnaires

Also a complete topic in itself! But here are some brief suggestions:

- Provide paper and online versions of questionnaires to meet different needs.

- Make sure any online survey is functional on a mobile phone, as this may be the most common way that people complete it. Provide tablets if possible if people need them.

- Don't ask too many questions, just those that are most crucial. Having multiple choice questions can reduce the length of time it takes to get information and make analysis quicker, but you can lose detail with only multiple choice, so carefully focused open-ended questions are also helpful.

- I currently use Microsoft Forms, which is "free" (at least if you have a Microsoft subscription), and Google Forms is another free alternative. There is other software such as SurveyMonkey, which has greater functionality for analysis, but if you go over a certain number of questions or responses there is a significant charge, which may not be value for money if you are not using it much.

- Where there is capacity it can be helpful to have workers or volunteers go through the scales with people. As well as the obvious advantage of motivating people to complete them, other people can often see changes that people accessing services may forget. There are issues here about impartiality and conflict of interest so people need to be properly trained, supported and monitored to implement questionnaires effectively.

- Make it clear why you're asking the questions and what benefit it might have to people accessing the service (and people who might access the service in the future), and to the organisation. Be clear about what you're doing with the information, and if possible feed back the results, and what you are doing with them, to people who have taken their time to fill it in.

- Be aware of any questions that may be distressing to people, which questions about mental wellbeing or loneliness might be, and consider how to support them. Academic surveys will have a bit at the beginning about possible detriment to respondents as part of their ethical processes, and may direct people to other sources of support. For charities, this is more likely to be support provided by the charity itself.

How Ideas to Impact can help

Support is available from a Power Hour, which gives people a sounding board and some pointers about what they can do to embed impact assessment into their work, through to working alongside organisations longer term as a learning partner, helping to devise an impact / evaluation framework and supporting data collection, analysis and reporting. Providing an evidence briefing for service design and funding is another option for people who don't have the time or the access to academic journals to do the research themselves.

Other related Ideas to Impact blogs

These are a mix of blogs about the impact process itself as well as research into evidence about loneliness and wellbeing. Let me know if exploration of other topics would be useful.

What should I put in my impact report?

The purpose of impact data collection: thinking through what you need and why

Befriending and the psychology of loneliness - research and evidence briefing

Why social isolation has an impact on health even if you don't feel lonely

Research on different types of loneliness and what this means for services

Other wellbeing indicators resources

Please let me know if there are other links that you have found useful.

What Works Wellbeing measures bank

Is there any other good practice that people would like to suggest based on what's worked for you, or any topics that it would be useful to have further information about? Let me know!